Over 13 years, I have been training for a job that leverages AI tools to develop strategic software and business solutions. I have been using Microsoft applications to automate business needs and there must be an AI tool, either in development, being refined, or already in use, that does what I’ve been doing all along, but at extreme speed, with unlimited scalability, and makes the whole process seamless and polished. Whether it’s building a model, refining an existing one, or working in a customer facing role to implement time saving solutions, this is where I see myself making the biggest impact professionally.

Working Life:

I first gained proficiency in Visual Basic for Applications (VBA) and its capabilities during my final year at Bank of America. Thirteen years ago, I worked in a reporting department responsible for generating reports that posed challenges for the SQL group. Sometimes, these reports required complex formulas, and in other instances, frequent changes in reporting requirements made it challenging for the data extraction experts to keep up. As a result, a team of 12 of us had to manually compile reports after receiving database downloads.

I tend to get acquainted with everyone in my workplace and establish connections with people from different departments. Through one of these connections, I was introduced to a piece of ECL (Enterprise Control Language [At least I think that’s what it’s called]) designed for screen scraping the AS400 User Interface (UI) and transferring the data to Excel. Initially the code did not work but after investing several months of learning and refining this code, I made it functional and adaptable for extracting various banking data through the AS400 UI. I accomplished this by adding AutECLPS.Wait ## statements in strategic locations throughout the code. Without this, the screen would not update fast enough as the code was running, and the screen scrape would pull data from the previous screen.

In this reporting department I ran this code to try and get another department “in trouble” and through the custom reporting lined up short sales with Mortgage Insurance Claims. When there was nothing (supposedly) wrong, my manager suddenly unfavorited me. It may have also been that I wrote scripts that automated the Pivot Table Style reports I was responsible for. She told me I could not use the macros because it would create perfect work and we are graded on the “prettiness” of the reports and if I had a script that colored, lined, font-ed my reports perfectly, it would not be fair to the group. With all that, I changed departments.

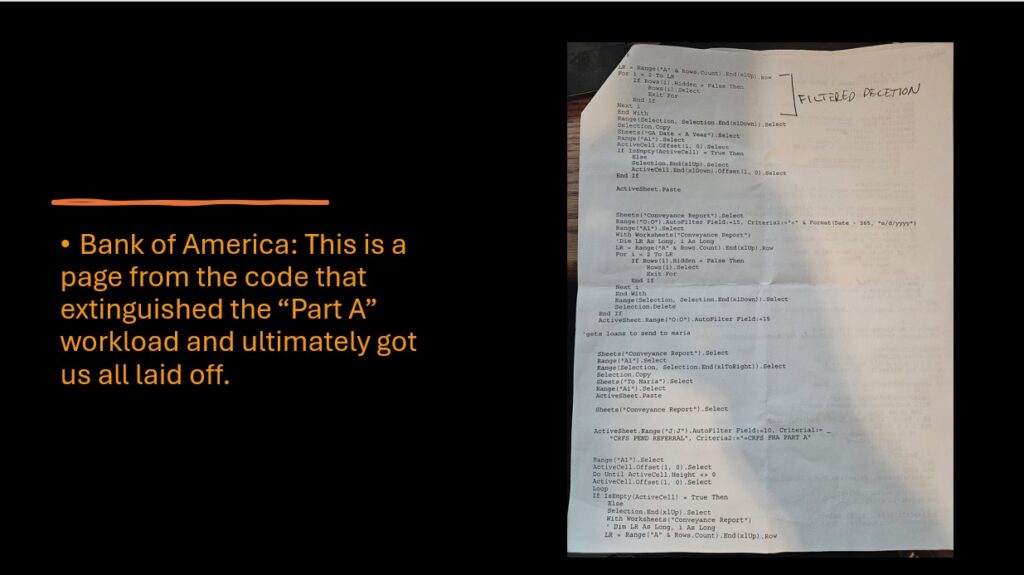

In this new department, focused on Housing and Urban Development and FHA Part A, I identified workflow inefficiencies that could be improved to save time for the entire Unit. The key process to enhance involves one manager receiving a report in the morning, refining it, and emailing it to five other managers, each overseeing teams of 12 employees. Creating this detailed report manually took around two hours, causing approximately 60 people to wait before starting their daily tasks. I proposed to my manager that I could write a VBA script to automate this report generation if I could be relieved from my other responsibilities. I spent some time completing the script, and once it was done, the unit had a tool to generate the day’s work for each team with the click of a button after the SQL group sent us the daily report (usually before most of the group arrived for work). This not only accelerated the distribution of information but also allowed me to learn what they were looking for in the loans they were receiving from the SQL group.

With the extra two hours a day the group finished work early and no one was getting overtime. I found out the loans that were sent to us from the SQL group were mostly getting kicked to us because they were stuck somewhere, and it would take someone manually changing the loan codes based on comments left of the file. The next report I created, Implemented the AS400 Screen Scrape scripts I had enhanced, a list of all potential Part A loan numbers, and conditional statements with wildcards to identify indicators in the comments section of AS400. This allowed us to drill down on the loans that could be worked. The loans that needed to go to another department were cataloged as well.

In the past this was a manual process, and each loan had to be opened by the group and researched to see if it could be worked. This reporting method completely removed all backlogged Part A claims in about 2 weeks, and they laid off all but 6 people in the department. My “scorecard” showed I had remedied zero “part A” claims and I was laid off with the bunch. The best part is, after I had created the scripts and implemented them my unit manager said I was not supposed to be doing this and could be fired if anyone finds out. So, in my exit interview I confirmed I did not do any part A claims and disgruntled with my entire BofA experience, I took the walk. I’m pretty sure someone else got the credit for my work.

After Bank of America, I worked for my dad for a little over a year at Roberts events running live audio, while maintaining the shop. I made some simple excel sheets, maybe a macro here or there, showcasing break even points in rent vs. buy comparisons with the shop gear. The suggestions I made backed by numbers were not acted upon and I realized working for the family was not going to work out if they did not want to make more money.

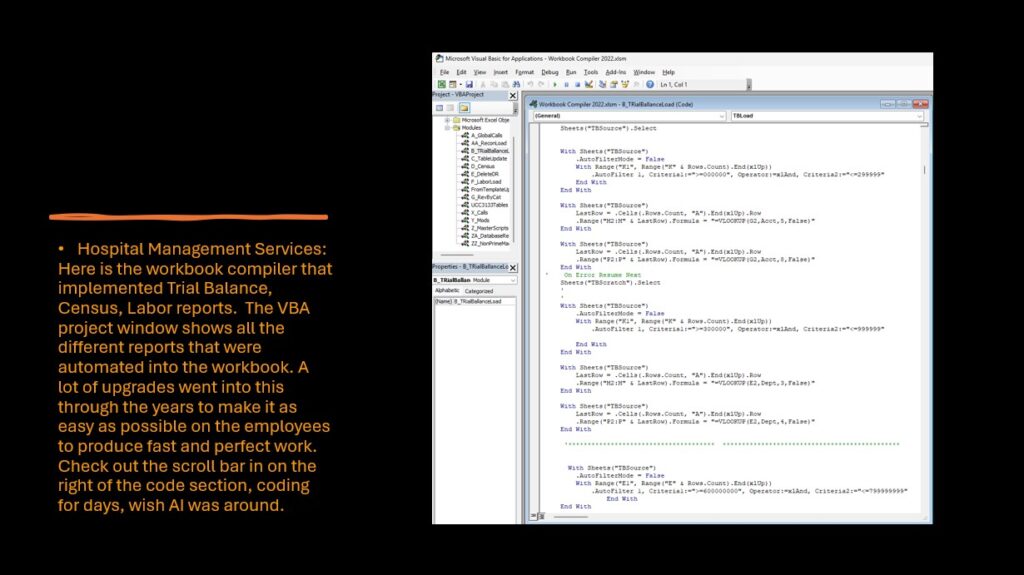

The last job I had was Hospital Management Services (HMS). I started as a 1099 employee and was brought on full time once I proved I could automate some tasks. My first Project was getting a Hospitals Trial Balance, Census, and Labor report into a consolidated workbook to submit a Medicare / Medicaid Cost Report to the Federal Government. The reports were due May 30th, and we received the input reports from the hospitals between February and March. The CEO said that HMS was constantly submitting late cost reports because of the setup time. Not only did it take the staff about 2 months’ time to set the workbook up, but the workbooks were all built differently depending on who put it together, making it hard to find information. To complicate things the 3 input reports were sent to us with different file types and column information in different orders. I wrote a script for each hospital that took the hospitals 3 input reports and set up a workbook with tabs showcasing the 3 reports dissected into pivot tables, tables, single number Drilldowns according to accounting parameter, and added information coming from Crosswalk tables. I set it up so all the reports would be ready at the same time and every report had the same format. The best part was I completed all 35 workbooks in 2 days’ time. This would have taken the team of fourteen two months to complete with variations of the reports depending on who created them. There were no more late reports or variations after the implementation of this automation and we saved over 1000-man hours, mostly in overtime with this automation.

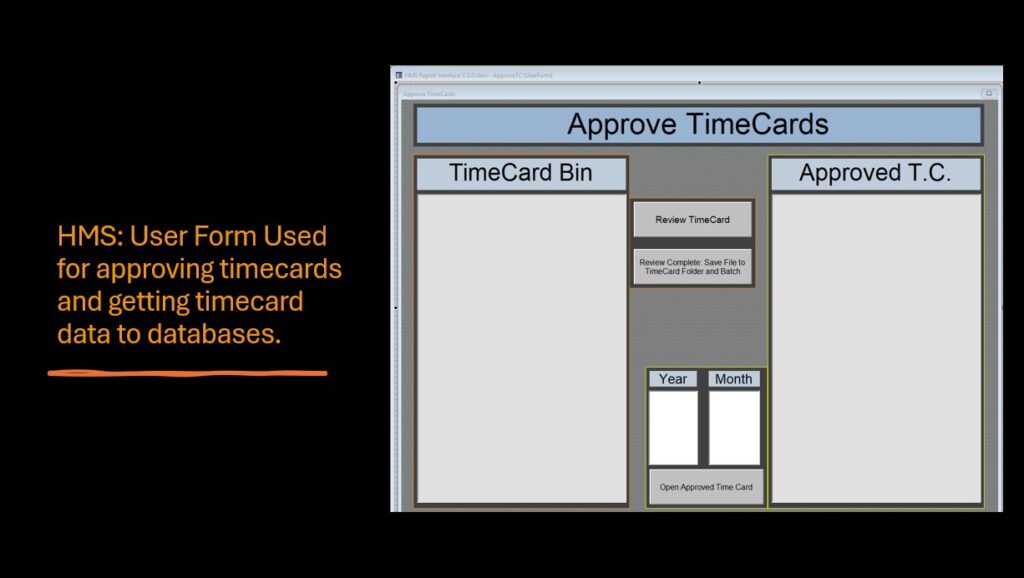

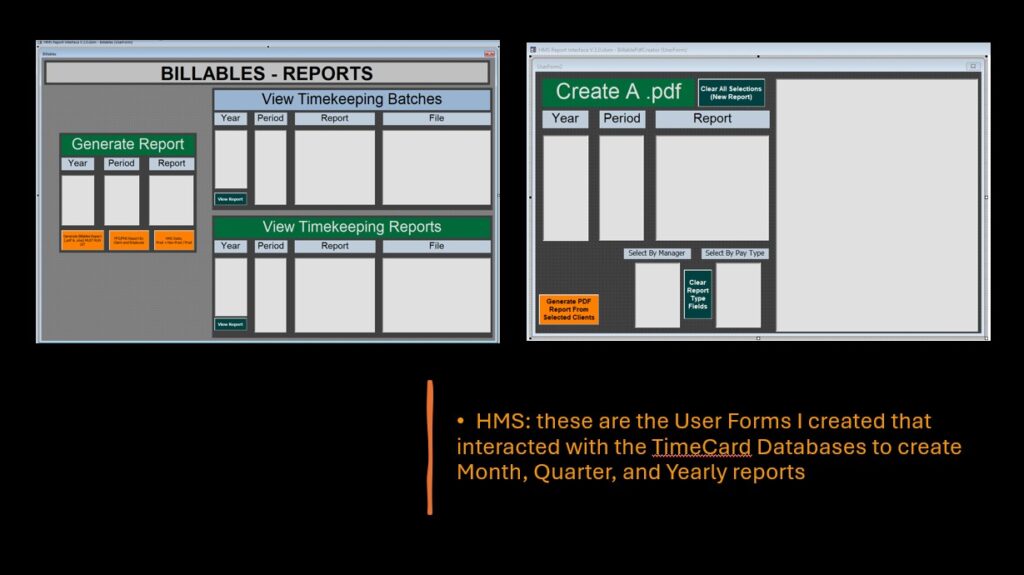

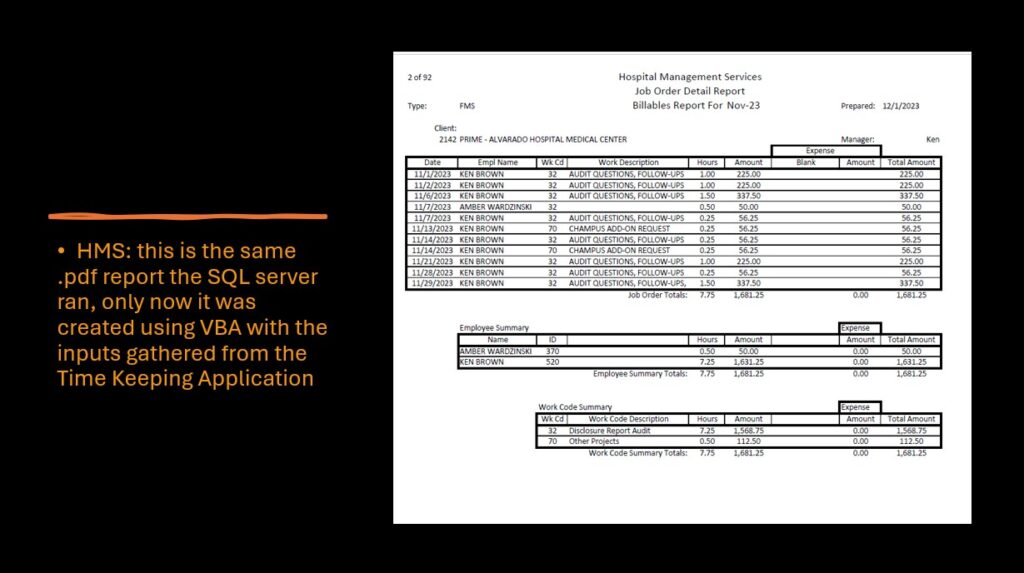

I learned in school that if you want to have a good business, it must be measured. I asked the CEO how the work and employees were measured. He advised it was by word of mouth or email and him reviewing the billable and non-billable information in the employee timecards. I told him how we need to start measuring time and efforts and make the information available at any given time. He said that we were going to have to wait because he was tired of using the SQL server and having three people spend one or two hours each on the input of the billable information. The only reason he kept the SQL server around was because it created a .pdf document of the invoices sent out to clients showing billable hours and total amounts owed to HMS. They were already using an excel sheet to track the employee’s time. Employees would attach the timecard file to an email at the end of the day to be forwarded around, eventually making it into the SQL server through manual data input. The CEO asked if I could atomate this 2-hour process out of the morning and I created a timecard that looked the same but with a button click would submit the timecard to a file folder on the shared network. I Then created a User form that interacted with the shared network and displayed the timecards to select and open for review. If everything looked good clicking the “review complete” button would database the Timecard. Approving a timecard also created file folders if they were not already present from a previous submission. The information went to a folder that housed all of the original format submitted timecards and then data based the information using auto created excel docs. The folders and files that were used to house the information was broken up into Daily, Monthly, Quarterly and Yearly Excel files all updated after the reviewer clicked the “Review Complete” button.

I then developed a month / year – end reporting application through an Excel User Form where folders housing the timecard data was accessed. I created the exact same report in .pdf format the SQL server was producing along with Productivity and Accounting Type reports. I also took the opportunity with the overhaul to add columns to the timecard to indicate the status, date of status change and the previous status of the workbook being billed. When a timecard was submitted If / Then statements were ran and tested who was sending the workbook and if the status changed in a way the assistant / manager would need to be notified. Depending on the Previous status and the new status an email was auto generated, indicating change, and sent to the appropriate recipient. This removed the manual process of emailing or calling someone to let them know the file was ready to work. Much time was saved with this and far less things were forgotten about. Buttons were also added to the timecard at this point that let the employees run a report and see the status of all their assigned workbooks in one place along with how long it had been in the status. Productivity was never higher.

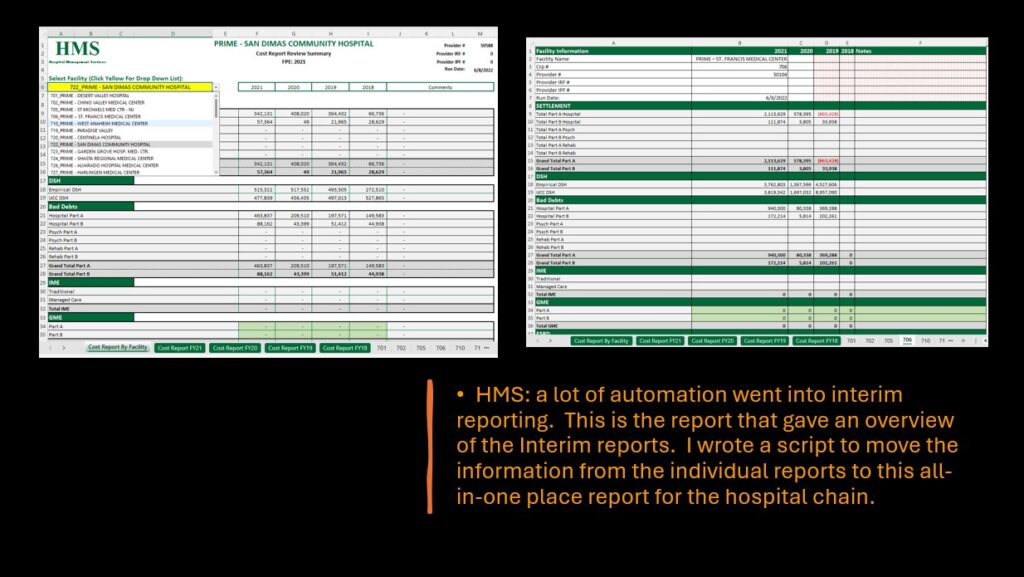

My next task was developing an Interim Review report that was previously done by the CEO for all HMS clients. This report took him about 2 months to complete, consisting of long hours and occasional errors in the manual data entry. There were 4 base reports that went into the interim reports. The report inputs came in the form of .txt, .csv, .xlsx, and .accdb. I developed scripts that dissected the various types of files and moved the needed numbers to the interim workbooks. When I was done with this project the reporting method created perfect information and the process could be done in 3 days’ time, freeing him up to work on other projects. I also created an overview of the Interim Reports by scripting a macro to pull the information from the Interim Reports and put it into a consolidated workbook that allowed viewing in one place and year to year comparisons

At this point we turned my attention to our server, and I oversaw the process of moving HMS data from our in-house server to the cloud. I researched HIPPA policies to make sure we were accommodating the feds requirements for stored patient data. We implemented 365 and SharePoint and started to introduce Azure. We used ShareFile to send encrypted information. This upgrade to the In house server involved me updating all the links that hooked files from the previous network within my code. I fought sync issues for a while too because some would not make sure they were properly synced to one drive. After researching the issue and finding out it was not all the employees not keeping up with their sync status, I discovered the sync issues were attributed to too many files on the SharePoint site and I would need to create a new SharePoint site to house the shared data/ reports. After the information was moved to the SharePoint site, I once again updated the file route links within all my code and the sync issues were remedied.

With cloud renovation we implemented DATTO a cloud storage back up solution. I was responsible for this application and restoring any needed backed up file from specified points in time.

I was also responsible for the Active Directory and keeping track of hardware and personnel. In some cases, I would modify the computers we purchased from Dell with graphics cards and in all cases I would set them up with the software HMS needed to do business with. Anytime there were issues with hardware or software I was first in line to deal with it or escalate it to our IT pro.

In this period, we also upgraded the phones. The ShoreTel phone server we were using was becoming outdated and Shortel wanted $15,000 to install a new server. We looked at other options and found a company called TPX that would rent the ip addresses to us for a SIP Trunk Migration. We used Mitel Business systems to use the TPX SIP trunks and got phones for a fraction of the price of a Shortel wanted.

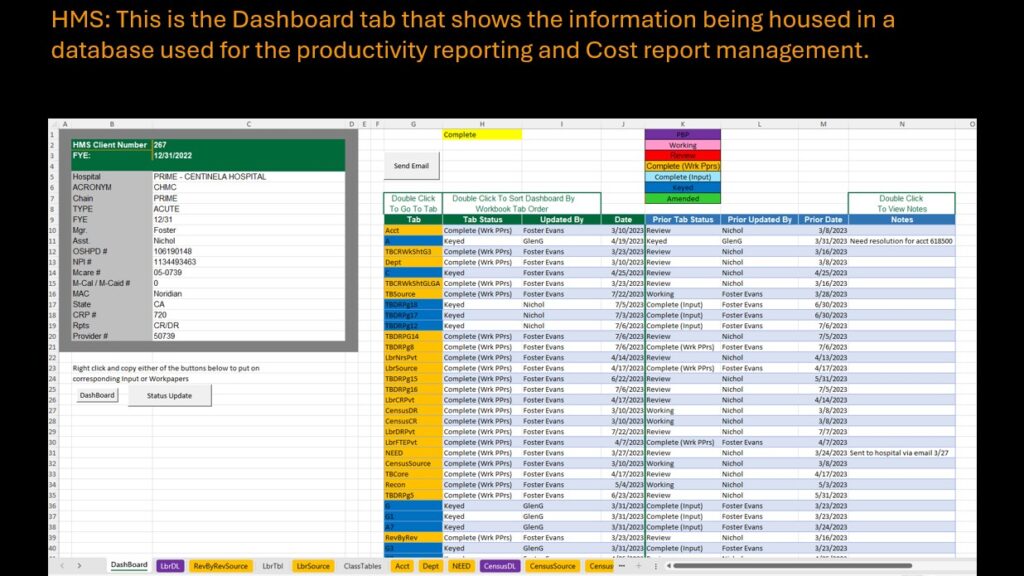

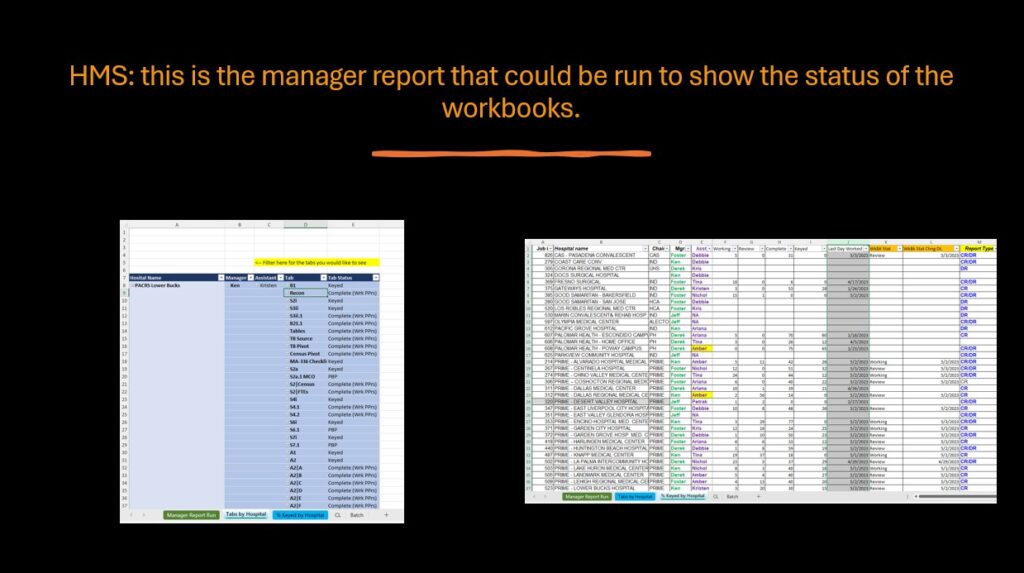

With all of the CEOs main goals accomplished in my free time I further developed the cost report workbook that grouped the Trial Balance, Census, and labor reports together. But in this rendition, I set out to measure the process involved. The method created made it easier to tell what needed to be worked on when the report changed hands from assistant to manager or manager to assistant. With the instruction from the CEO that it was critical that all hospital data lived in one place, I developed a new interactive Excel workbook. Every sheet within the workbook had a five-button control panel that dynamically updated the status of each tab, marking it as Working, Review, Completed, Source (DL), or Audit, also allowing for a comments section with notes for the reviewer. These selections also updated a dashboard tab 1st in tab order for a real-time overview of the workbook. Clicking a row in the dashboard instantly navigated users to the relevant section, making it easy to find exactly what they needed. But the real power came when the workbook closed. It automated three key actions: stripping unnecessary formatting to prevent corruption in SharePoint, sending notification emails to the right people based on status changes, and pushing an updated dashboard summary to a database for seamless reporting. The reporting tool, built within Excel, let the team filter data by employee, hospital, and status, tracking completion of work and the time each tab was in each status. This system was instrumental in keeping reporting on track and was one of the key reasons HMS was able to keep the same number of employees while servicing about 75 hospitals, more than twice as many as we started with, with minimal errors.

The one area that the CEO steered clear of, no matter how much I encouraged him to have me automate the process of the accountants’ work, he would with 100% certainty say that he did not want me to. He advised everyone that is not a manager, is learning how to do these reports and if I automated the entire process by creating a list of input numbers, no-one would know the workings of how the Cost Report was submitted. To make things even more enticing I got ahold of a VBA script that autoloaded the Cost Report input numbers directly into the federal approved input sheets that were uploaded to Centers for Medicare/Medicaid Services (CMS). To this day, I still wish we would have developed those scripts. At least, to check the work that everyone was doing, or do it in a way that walked them through the process of what was going on. In my younger years (Bank of America) I might have automated this process anyway, but that path left a bad taste in my mouth, and I have coded with 100% transparency since.

Everything the CEO had asked that I automate, I did. Covid hit and the workers all went home. The best part of work was going to the Office on Thursday because that was the day all the staff, for the most part, showed up. So, I was at home for four days of the week with my creations to run, the occasional IT responsibilities and nothing left to automate. This did allow a lot of free time and with that, given the opportunity to spend a lot of time with my family, it even contributed to making for more time to help my mom after my dad passed away.

As of 1/1/2023 HMS closed its doors and I have been looking for work sense. Last week I walked through the front door of businesses with resume in hand and explained the skills I have developed over the years. I also got the chance to talk about what their business does, and how they use data to drive the organization. For instance, I gave my resume to a business owner that held events, and he was saying that his staff had to send out a lot emails. With that information I went home and developed an email sending application in about 30 minutes with the help of ChatGPT. I Gave GPT the parameters of how I needed the input sheet to look (e.g. | First | Last |email | subject | body) and then fed code to GPT that I wrote in the past that was similar in handling email sends. With my first request to GPT I received a script that required zero debugging. I reached out to the business and left a message for the guy I spoke with letting him know that I had created an application to auto send email and had the ability to tweak the application to encompass any other data they would like to implement. This was on Thursday and I am still waiting to hear back.

In another connection I made from waking around, Picnic Time who made picnic baskets was looking for a controller. I expressed that I could do the controller job in a way no-one has done before and automate most of, if not all of the process involved. After receiving an email stating they were looking for someone that had done the job before I responded by email and included a few ideas for his business. Here is the section from the email:

I really appreciate you taking the time to speak with me. I totally understand where you’re coming from, though I have to admit I was looking forward to sending my family some Picnic Time baskets! I think they would’ve loved them.

I also spent some time thinking about ways I could help your business grow in other areas. For example, I can develop tools to improve logistics, cost-efficiency, and inventory management. One specific idea is an ordering system that factors in usage rates, quantity discounts, storage costs, and reorder schedules. The goal would be to ensure items are available for bundling without unnecessary overstock.

A formula for this could be:

Optimal Order Quantity = (Average Daily Usage × Lead Time) + (Backlog Buffer × Seasonal Demand Factor)

This would allow for strategic ordering, reducing waste while ensuring you never run out of key items. If you’re interested, I’d love to discuss how this could be tailored to your specific needs…

I am still waiting to hear back from this guy as well.